Author: Niklas Riedinger

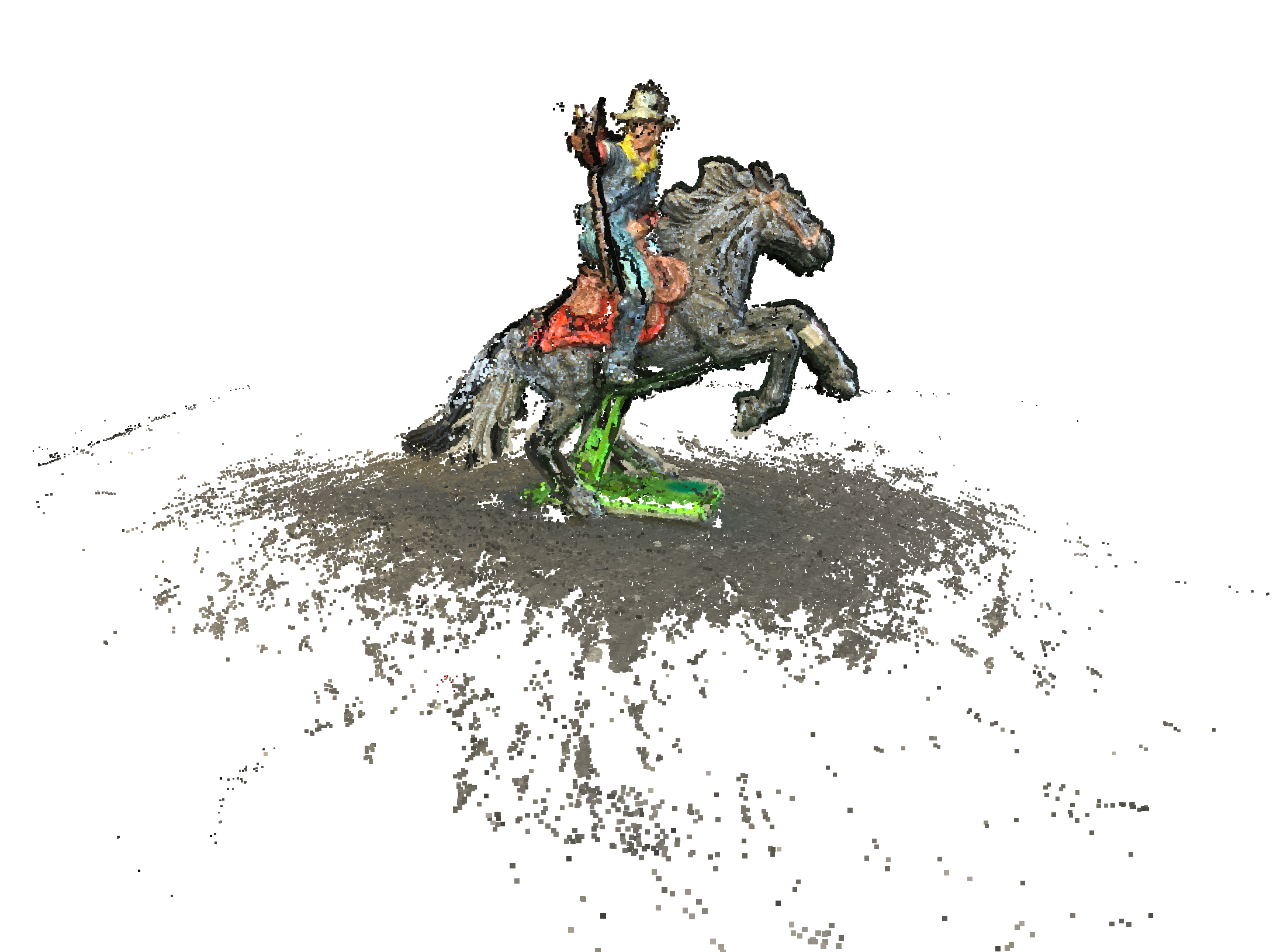

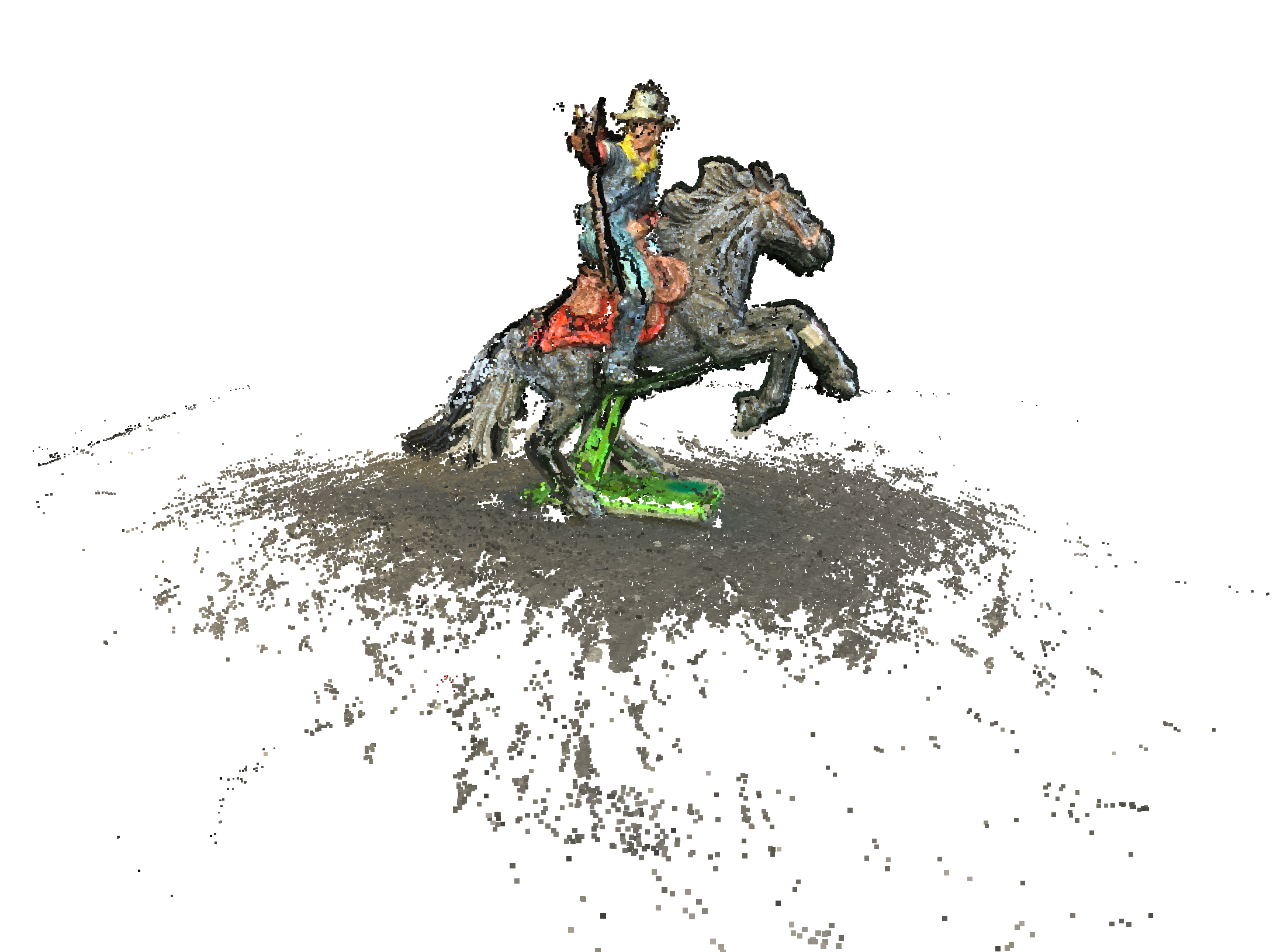

Real object

Polycam

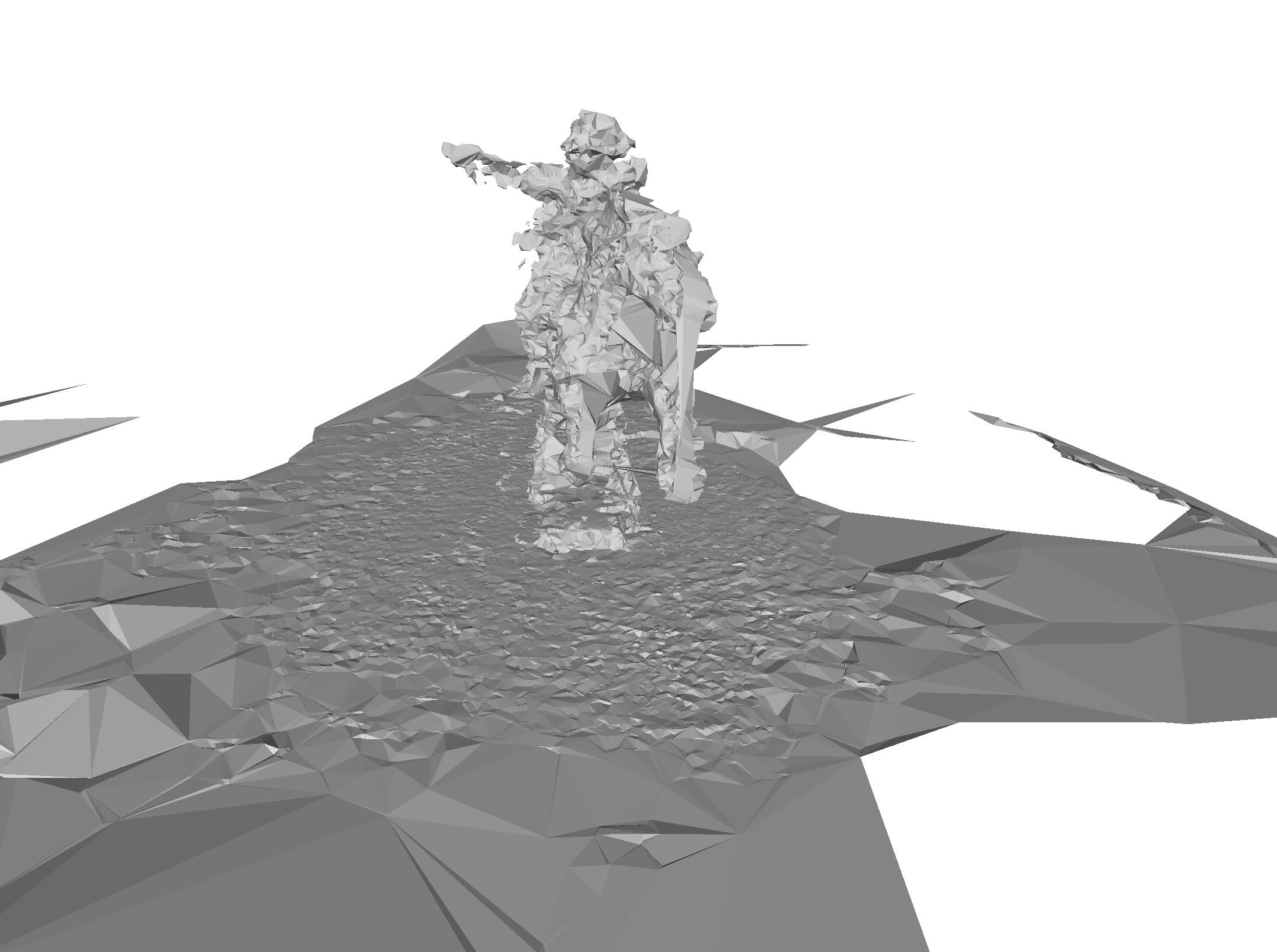

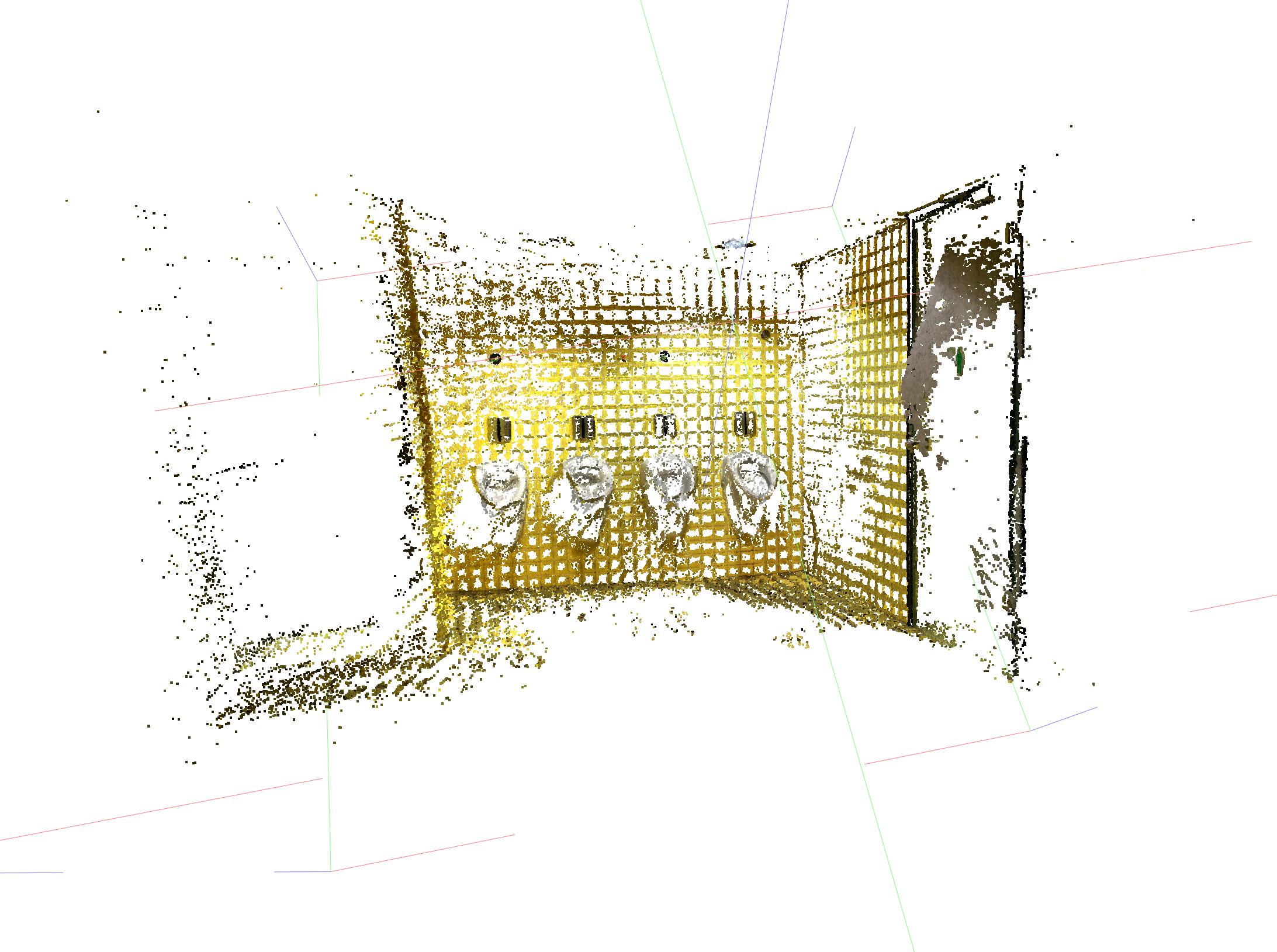

COLMAP Pointcloud

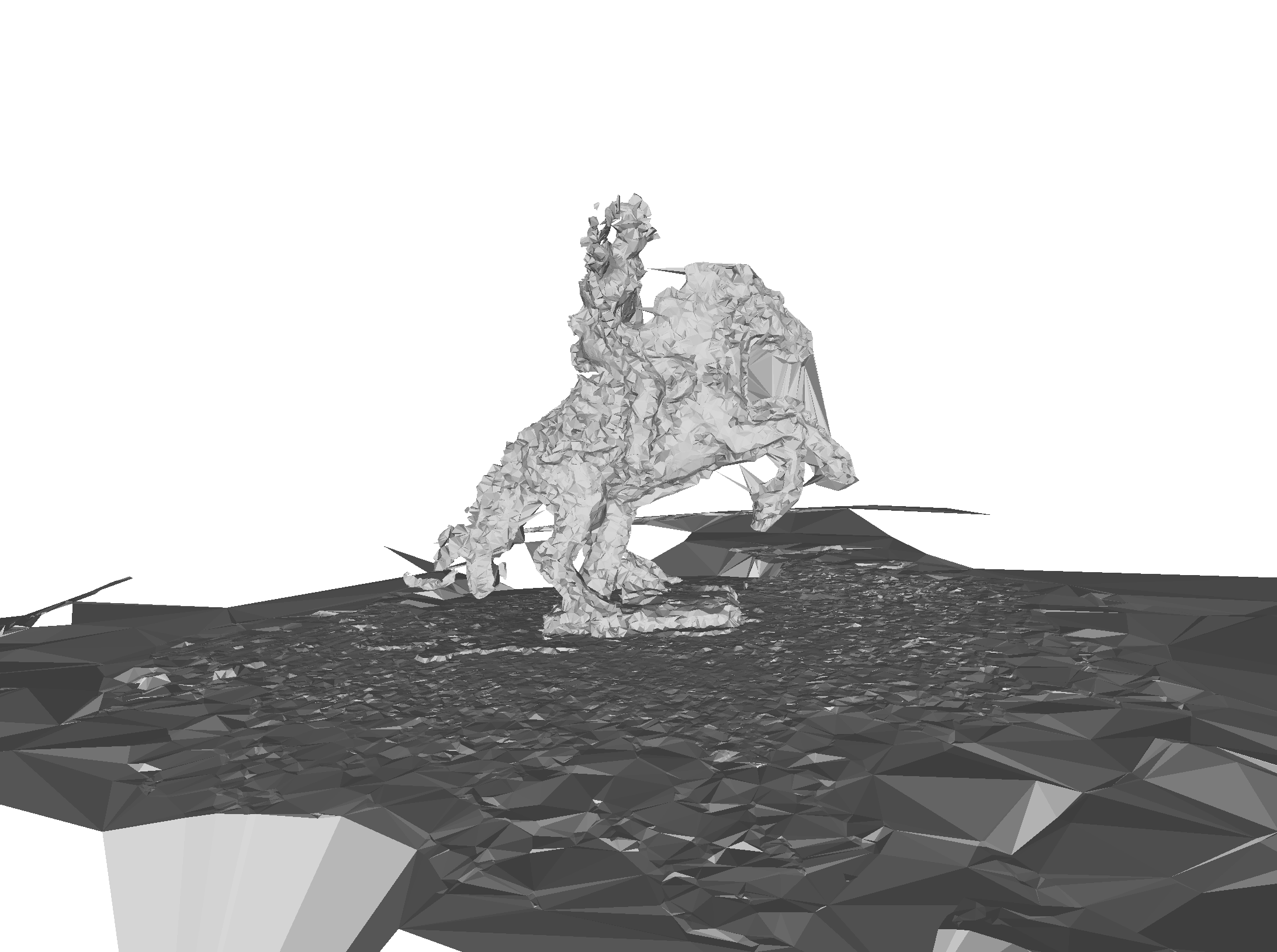

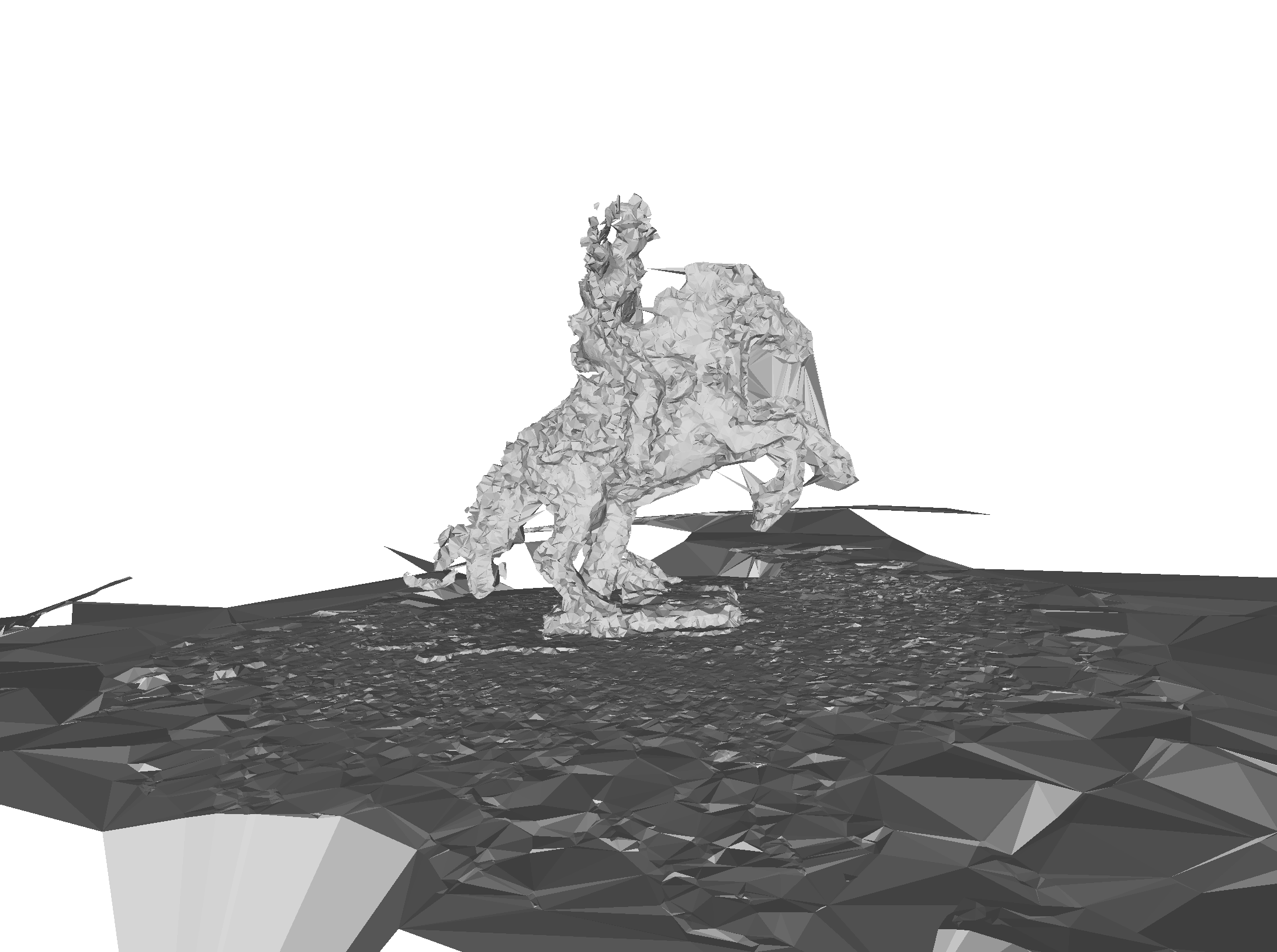

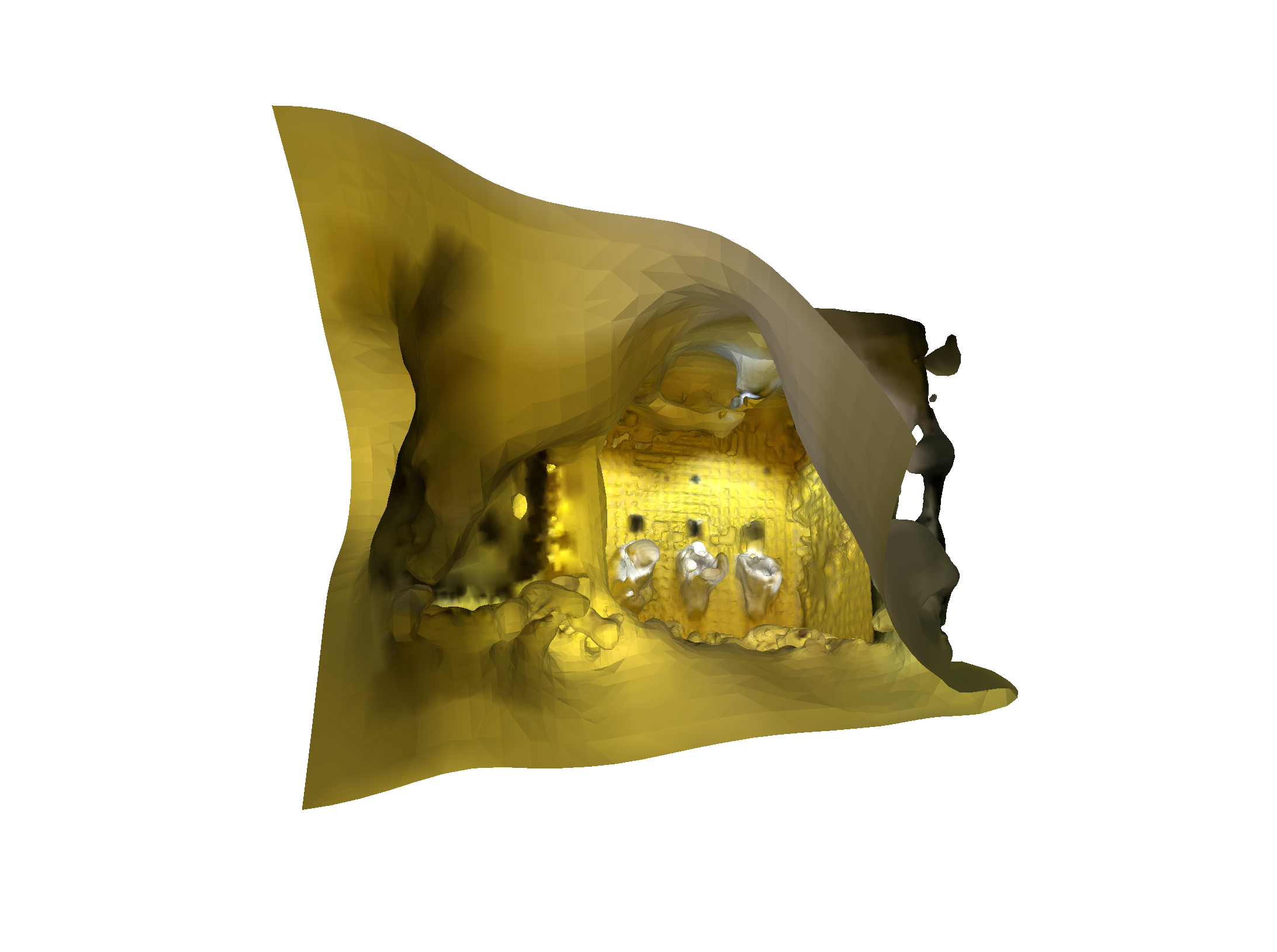

COLMAP Delaunay

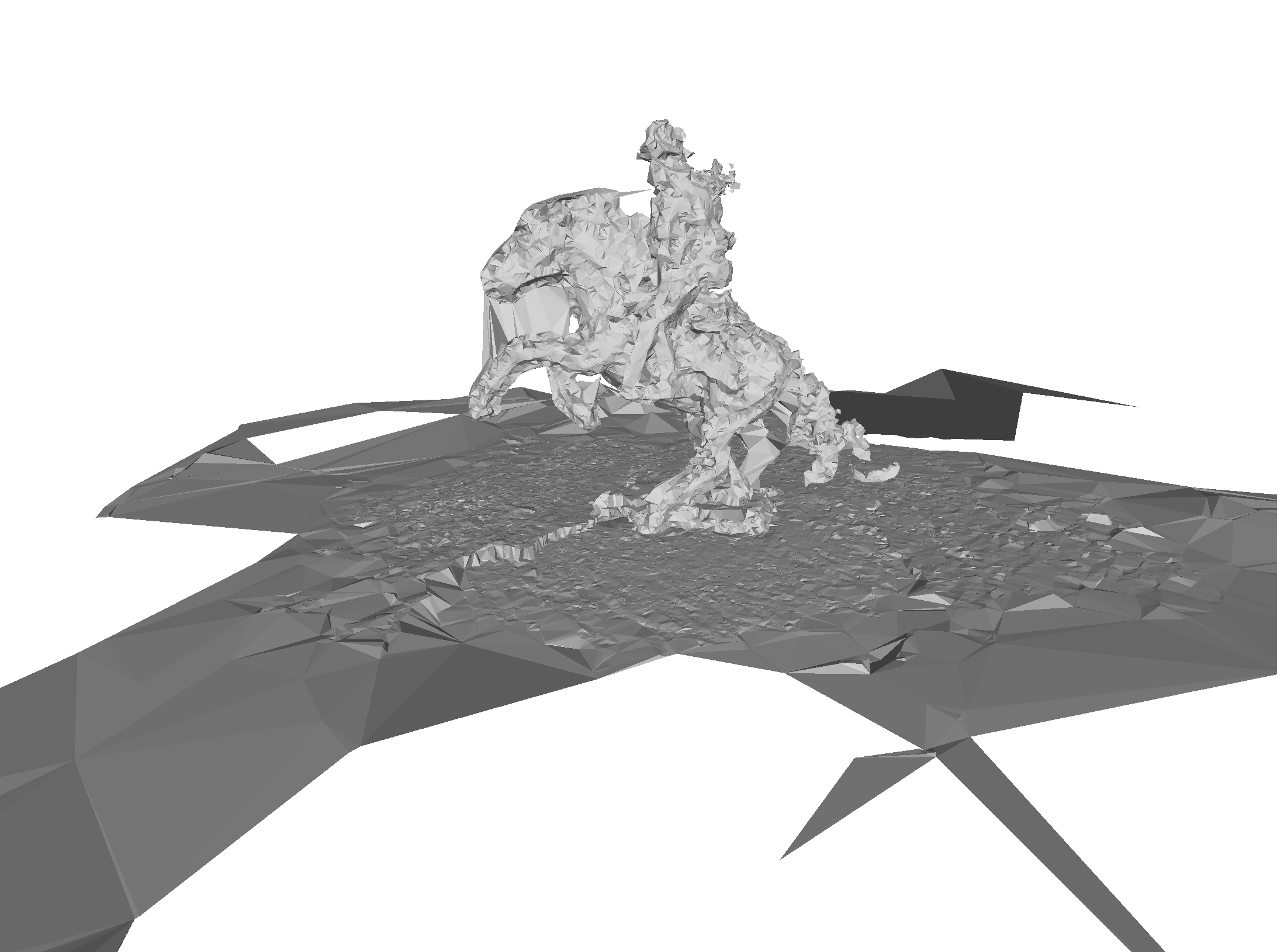

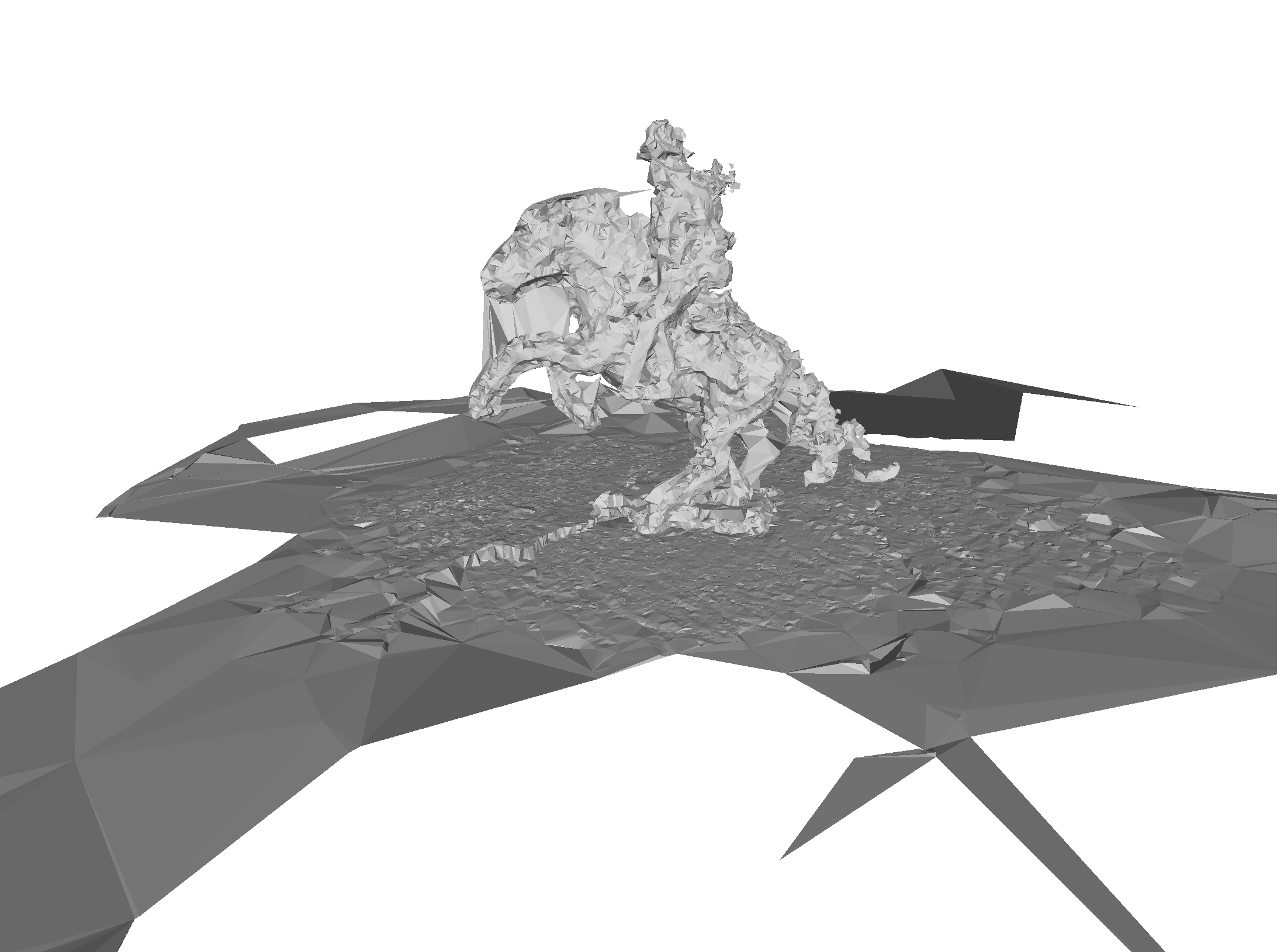

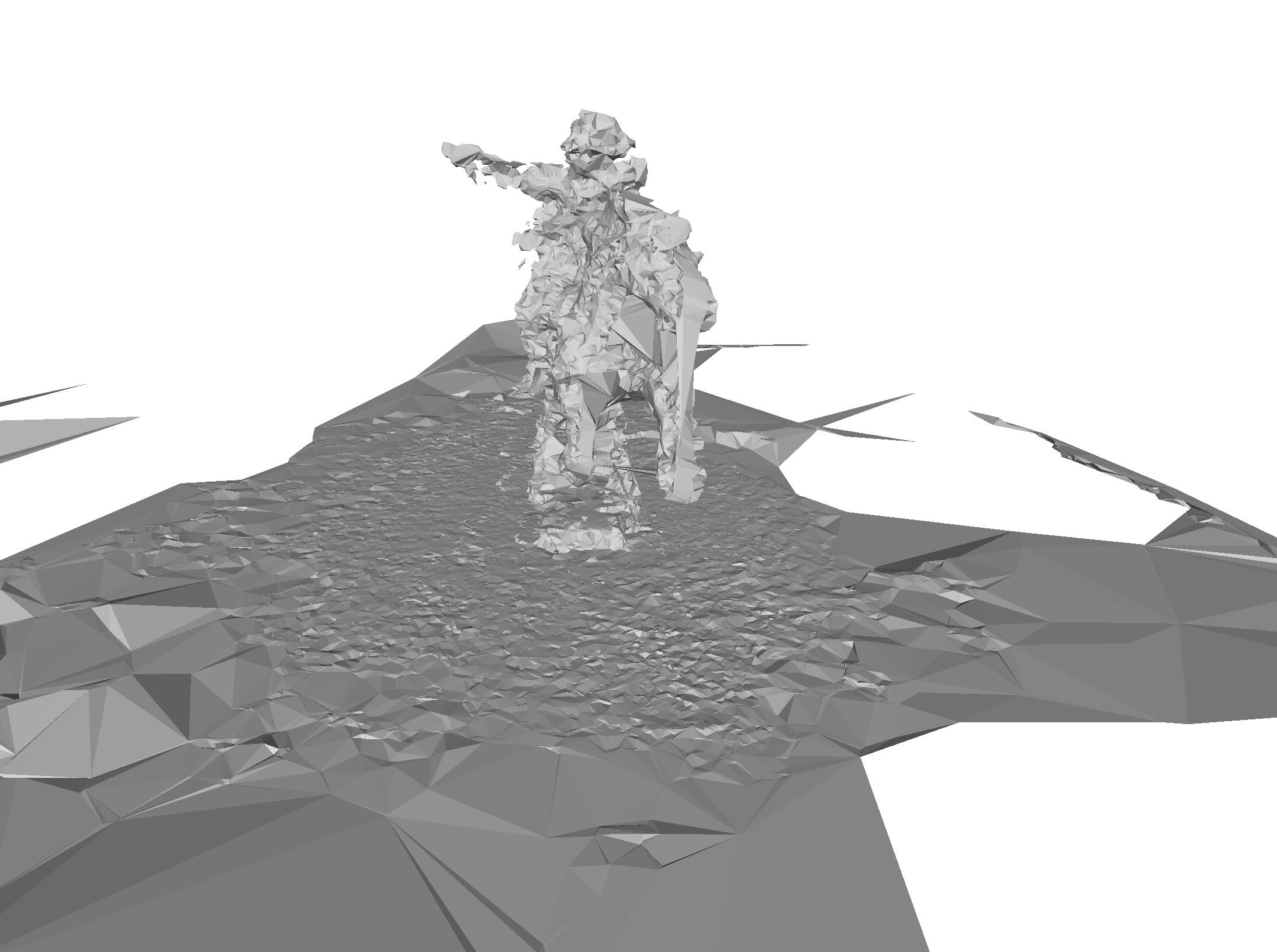

COLMAP Poisson

MASt3R

VGGT

I used the iOS app Polycam with a Pro subscription and an iPhone XS (no LiDAR) to record objects and generate a mesh (.obj) and a pointcloud (.ply).

To keep the quality consistent across all images, I placed the object on an electric turntable and mounted my iPhone on a tripod next to it.

The result with Polycam is surprisingly good, even though Polycam cannot use LiDAR on the iPhone XS and has to rely on the images alone (SfM). Even details like the barrel of the rifle or the sword's blade are well reconstructed. Only small imperfections are visible at the horse's tail and at the bottom, where part of the ground is visible.

COLMAP was fed the same input images as Polycam. However, the resulting pointcloud and meshes are of much lower quality and noisier than the mesh Polycam generates.

The colored pointcloud generated by COLMAP is quite noisy but still recognizable as the original object. However, there is a black silhouette around the outer edge of the cloud, where COLMAP treated the black background as part of the object. These black artifacts are also noticeable in the Poisson mesh (see below).
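One possible way to reduce such a black silhouette is to discard very dark points before meshing. This was not part of the workflow described here. It is only a minimal sketch, assuming the cloud has already been loaded as a list of `(x, y, z, r, g, b)` tuples. The function name `drop_dark_points` and the threshold `min_brightness` are hypothetical, and the threshold would need tuning per scan.

```python
from typing import Iterable, List, Tuple

# One point: position (x, y, z) followed by an 8-bit RGB color.
Point = Tuple[float, float, float, int, int, int]


def drop_dark_points(points: Iterable[Point], min_brightness: float = 30.0) -> List[Point]:
    """Keep only points whose mean RGB value is at least min_brightness.

    min_brightness is a hypothetical threshold on a 0-255 scale.
    """
    kept = []
    for x, y, z, r, g, b in points:
        brightness = (r + g + b) / 3.0
        if brightness >= min_brightness:
            kept.append((x, y, z, r, g, b))
    return kept


cloud = [
    (0.0, 0.0, 0.0, 200, 180, 160),  # bright surface point
    (0.1, 0.0, 0.0, 5, 5, 5),        # near-black background point
    (0.2, 0.1, 0.0, 90, 60, 40),     # darker, but still part of the object
]
filtered = drop_dark_points(cloud)
print(len(filtered))  # 2
```

A filter like this also removes genuinely dark parts of the object, so it only makes sense when the object itself contains little black.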

The result of the Delaunay algorithm is very noisy, and the shape of the object is very jittery. This is probably caused by the noise in the pointcloud; the Delaunay algorithm is probably best used with very clean pointclouds.

The mesh generated by the Poisson algorithm is the best result from COLMAP, even though its quality is not comparable to that of the Polycam mesh. It is smoother than the Delaunay mesh, but it has black blobs around the outer edge, resulting from the black points in the pointcloud (see above).
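The three COLMAP outputs discussed above (fused pointcloud, Delaunay mesh, Poisson mesh) correspond to steps of COLMAP's command-line pipeline. The exact settings used for this test are not recorded here, so the following is only a sketch that follows the example in COLMAP's CLI documentation. The folder layout (`images`, `sparse`, `dense`) and the helper name `colmap_commands` are assumptions. The helper only builds the commands and does not run them.

```python
import shlex
from pathlib import Path
from typing import List


def colmap_commands(dataset: Path) -> List[List[str]]:
    """Build the COLMAP CLI calls for sparse, dense and mesh reconstruction.

    Assumes the input images are in dataset/images and that the mapper
    writes its first model to dataset/sparse/0. The sparse and dense
    folders must exist before the commands are run. Nothing is executed here.
    """
    db = str(dataset / "database.db")
    images = str(dataset / "images")
    sparse = str(dataset / "sparse")
    dense = str(dataset / "dense")
    fused = str(dataset / "dense" / "fused.ply")
    return [
        # Sparse reconstruction: features, matching, camera poses.
        ["colmap", "feature_extractor", "--database_path", db, "--image_path", images],
        ["colmap", "exhaustive_matcher", "--database_path", db],
        ["colmap", "mapper", "--database_path", db, "--image_path", images,
         "--output_path", sparse],
        # Dense reconstruction (patch_match_stereo needs a CUDA-capable GPU).
        ["colmap", "image_undistorter", "--image_path", images,
         "--input_path", str(dataset / "sparse" / "0"),
         "--output_path", dense, "--output_type", "COLMAP"],
        ["colmap", "patch_match_stereo", "--workspace_path", dense,
         "--workspace_format", "COLMAP",
         "--PatchMatchStereo.geom_consistency", "true"],
        ["colmap", "stereo_fusion", "--workspace_path", dense,
         "--workspace_format", "COLMAP", "--input_type", "geometric",
         "--output_path", fused],
        # Meshing: Poisson works on the fused cloud, Delaunay on the workspace.
        ["colmap", "poisson_mesher", "--input_path", fused,
         "--output_path", str(dataset / "dense" / "meshed-poisson.ply")],
        ["colmap", "delaunay_mesher", "--input_path", dense,
         "--output_path", str(dataset / "dense" / "meshed-delaunay.ply")],
    ]


# Print the commands for a hypothetical dataset folder called "scan".
for cmd in colmap_commands(Path("scan")):
    print(shlex.join(cmd))
```

To actually run the pipeline, each list could be passed to `subprocess.run(cmd, check=True)` once the `sparse` and `dense` folders have been created.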

MASt3R's AI model gave the worst results of the methods I tested. With the same input images I used in Polycam, it wasn't able to determine the camera positions at all. That was probably because the object was on a turntable with the background visible, so the static background combined with the rotating object compromised the feature matching. Only after removing the background from each input image with Meta's Segment Anything was I able to generate a pointcloud that somewhat resembled the original object.
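The background removal step can be sketched as follows, assuming a segmentation model such as Segment Anything has already produced a boolean mask per image (`True` where the object is). The call to the segmentation model itself is omitted. The function name `remove_background` and the fill color are assumptions, since the color used to replace the background is not stated here.

```python
import numpy as np


def remove_background(image: np.ndarray, mask: np.ndarray, fill: int = 0) -> np.ndarray:
    """Return a copy of image with every pixel outside mask set to fill.

    image: H x W x 3 uint8 array; mask: H x W array, truthy where the object is.
    fill is a hypothetical background value (0 = black).
    """
    if image.shape[:2] != mask.shape:
        raise ValueError("image and mask must have the same height and width")
    out = np.full_like(image, fill)
    object_pixels = mask.astype(bool)
    out[object_pixels] = image[object_pixels]
    return out


# Tiny 2x2 example: a white image where only the diagonal belongs to the object.
image = np.full((2, 2, 3), 255, dtype=np.uint8)
mask = np.array([[True, False], [False, True]])
result = remove_background(image, mask)
print(result[0, 0], result[0, 1])
```

With the static background masked out, only the rotating object remains as a source of features, which is consistent with the reconstruction only working after this step.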

VGGT was the better AI model. With the same background-removed input images I used for MASt3R, it gave a much better result, although not as good as Polycam's.

Do we still need COLMAP (feature detection) or are the AI models good enough?

At least with the dataset I used, I got much better results with Polycam (SfM, no LiDAR) than with the AI models MASt3R and VGGT. Keep in mind that I don't know whether Polycam only uses regular SfM like COLMAP does, or whether it combines it with other methods or even AI. I also want to add that I used the Pro version of Polycam, which costs around 200€ per year, so a better result than with free software was to be expected.